Deep learning for image recognition is a powerful technology for solving complex problems. Discover the techniques and challenges that come with it.

In the vast realm of artificial intelligence, deep learning has become a game-changer, particularly in image recognition. The ability of machines to recognize and categorize images, somewhat as the human brain does, has opened up a wealth of opportunities and challenges. Let's dive into the techniques deep learning offers for image recognition and the obstacles that come with them.

Convolutional Neural Networks (CNNs)

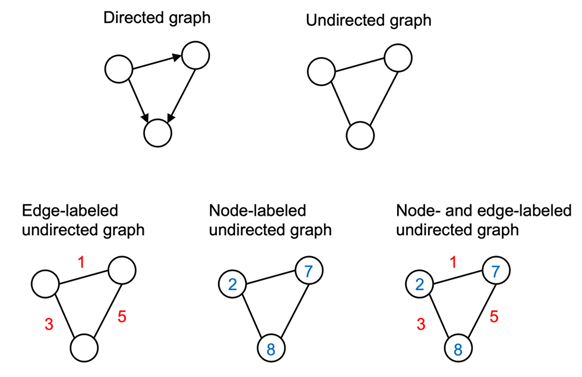

Technique: CNNs are the backbone of modern image-recognition systems. They consist of several layers of small collections of neurons, each of which processes a small region of the input image called its receptive field. The outputs of these collections are then tiled so that their input regions overlap, which gives a better representation of the original image; this overlap is a distinctive feature of CNNs.
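
To make overlapping receptive fields concrete, here is a minimal pure-Python sketch of a single 2D convolution with stride 1 and no padding. It assumes a grayscale image stored as a list of pixel rows and a hand-picked kernel; the function name `conv2d` is illustrative. Like most deep learning libraries, it actually computes cross-correlation (the kernel is not flipped), which is what "convolution" usually means in CNNs.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (both lists of rows) with stride 1, no padding.

    Each output value depends only on one kernel-sized window of the input
    (its receptive field). Neighboring windows overlap, and every position
    reuses the same kernel weights.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum over the receptive field whose top-left corner is (i, j).
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# A 4x4 image with a dark-to-bright vertical edge, and a 2x2 edge-detecting kernel.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
kernel = [[-1, 1],
          [-1, 1]]
print(conv2d(image, kernel))  # → [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

Each 2x2 window shares a column of pixels with its neighbor, so the edge is picked up by exactly the windows that straddle it.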

Data: CNNs need large amounts of data to work well. In general, the more varied, well-labeled images are available, the more accurate the results tend to be, because the network must be trained on a wide variety of images to learn to recognize patterns and tell different objects apart.
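
When collecting more images is expensive, a widely used way to add variety is data augmentation: creating label-preserving variants of existing images. This technique is not described in the text above; the sketch is a minimal illustration assuming images are stored as lists of pixel rows and that a left-right mirror does not change the label. The helper names are hypothetical.

```python
def horizontal_flip(image):
    """Mirror an image (a list of pixel rows) left to right."""
    return [list(reversed(row)) for row in image]


def augment_with_flips(dataset):
    """Return the original (image, label) pairs plus a mirrored copy of each.

    Keeping the label unchanged is only valid when the class does not depend
    on left/right orientation: a cat is still a cat, but a mirrored "b" is a "d".
    """
    augmented = list(dataset)
    for image, label in dataset:
        augmented.append((horizontal_flip(image), label))
    return augmented


print(augment_with_flips([([[1, 2], [3, 4]], "cat")]))
# → [([[1, 2], [3, 4]], 'cat'), ([[2, 1], [4, 3]], 'cat')]
```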

Hurdles: The main challenge with CNNs is that they need a lot of data and computing power. Training can be expensive and time-consuming, and when too little data is available the network can overfit, memorizing its training images instead of learning patterns that carry over to new ones. CNNs can also generalize poorly beyond their training data: a classifier can only assign the categories it was trained on, so it cannot recognize objects from classes it has never seen.
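
Overfitting is usually detected by holding out a validation set the network never trains on and watching its loss: if validation loss starts rising while training loss keeps falling, the network is memorizing. One common countermeasure is early stopping. The sketch below assumes per-epoch validation losses have already been recorded; the function name and its `patience` parameter are illustrative and not taken from any particular library.

```python
def best_epoch_with_early_stopping(val_losses, patience=3):
    """Return the index of the epoch to keep under simple early stopping.

    Training stops once validation loss has failed to beat its best value
    for `patience` consecutive epochs; the best epoch seen so far is returned.
    """
    best_epoch = 0
    best_loss = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # validation loss has stalled: likely overfitting
    return best_epoch


val_losses = [0.90, 0.70, 0.60, 0.62, 0.65, 0.70, 0.55]
print(best_epoch_with_early_stopping(val_losses, patience=3))  # → 2
print(best_epoch_with_early_stopping(val_losses, patience=5))  # → 6
```

A small `patience` saves compute but can stop before a late improvement, as the second call shows.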

Deep Neural Networks (DNNs)

Technique: DNNs are neural networks with several hidden layers; strictly speaking, CNNs are a specialized kind of DNN, not the other way around. A DNN built from fully connected layers can also be used for image recognition: its successive layers of neurons transform the input image into increasingly abstract representations. Whether it is more accurate than a CNN depends on the task, but on images convolutional architectures are usually the stronger choice. DNNs can also be used for image classification and image segmentation.
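
A minimal sketch of a fully connected DNN (a multilayer perceptron) applied to a tiny flattened 2x2 image. The weights are hand-picked for illustration rather than learned, and the names `dense` and `mlp_forward` are hypothetical; a real network learns its weights by training on labeled images.

```python
def relu(values):
    """Rectified linear unit, applied element-wise."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """Fully connected layer: every output neuron sees every input value."""
    return [
        sum(w * x for w, x in zip(neuron_weights, inputs)) + b
        for neuron_weights, b in zip(weights, biases)
    ]


def mlp_forward(pixels, layers):
    """Run a flattened image through a list of (weights, biases) layers.

    ReLU follows every layer except the last, which returns raw class scores.
    """
    activations = pixels
    for index, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if index < len(layers) - 1:
            activations = relu(activations)
    return activations


pixels = [1.0, 0.0, 0.0, 1.0]  # a 2x2 image, flattened row by row
layers = [
    ([[1, 0, 0, 1], [0, 1, 1, 0]], [0.0, 0.0]),  # hidden layer: 2 neurons
    ([[1, -1], [-1, 1]], [0.0, 0.5]),            # output layer: 2 classes
]
print(mlp_forward(pixels, layers))  # → [2.0, -1.5]
```

Flattening discards the 2D layout, which is one reason CNNs usually do better on images.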

Data: DNNs also require large amounts of data to work properly. They are sometimes described as trainable on smaller datasets than CNNs, but for images this generally does not hold: a fully connected network has no built-in notion of neighboring pixels and usually has many more parameters per layer, so it tends to need at least as much data as a CNN.
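
One way to see why architecture affects data requirements is to count trainable parameters, since more parameters give a model more room to memorize a small dataset. A rough sketch, assuming a 32x32 RGB input mapped to 100 outputs, sizes chosen purely for illustration; the helper names are hypothetical.

```python
def dense_params(n_inputs, n_outputs):
    """Weights plus one bias per output neuron."""
    return n_inputs * n_outputs + n_outputs


def conv_params(in_channels, out_channels, kernel_size):
    """One kernel_size x kernel_size filter per (input, output) channel pair, plus biases.

    The count does not depend on image size, because the same filter weights
    are reused at every position.
    """
    return in_channels * out_channels * kernel_size * kernel_size + out_channels


fc = dense_params(32 * 32 * 3, 100)  # 100 hidden units, each seeing all 3072 inputs
conv = conv_params(3, 100, 3)        # 100 feature maps from 3x3 filters
print(f"{fc:,}")    # → 307,300
print(f"{conv:,}")  # → 2,800
```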

Hurdles: The main challenge with DNNs is that they need a lot of time and computing power to train properly. They are also sensitive to noise and variations in the input data, which can lead to inaccurate results.
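
A back-of-the-envelope way to estimate that computing cost is to count multiply-accumulate operations (MACs). The sketch assumes a fully connected network with layer widths [3072, 1024, 1024, 10], a 50,000-image dataset and 10 epochs, all hypothetical; it counts forward passes only, and the backward pass is commonly estimated at roughly twice the forward cost.

```python
def dense_macs(layer_sizes):
    """MACs for one forward pass of a fully connected network.

    `layer_sizes` lists each layer's width, input first. Every connection
    between consecutive layers costs one multiply-accumulate.
    """
    return sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))


def forward_macs_for_training(layer_sizes, n_images, n_epochs):
    """Forward-pass MACs over a whole training run.

    Backward passes are not included; a common rule of thumb puts them at
    about twice the forward cost.
    """
    return dense_macs(layer_sizes) * n_images * n_epochs


sizes = [3072, 1024, 1024, 10]
print(f"{dense_macs(sizes):,}")                               # → 4,204,544
print(f"{forward_macs_for_training(sizes, 50_000, 10):,}")    # → 2,102,272,000,000
```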

Computers today can not only classify photos automatically but also describe the various elements in a picture, writing a short, grammatically correct English sentence about each region. This is typically done by pairing a convolutional neural network (CNN), which learns visual patterns that naturally occur in photos, with a language model that generates the sentences.