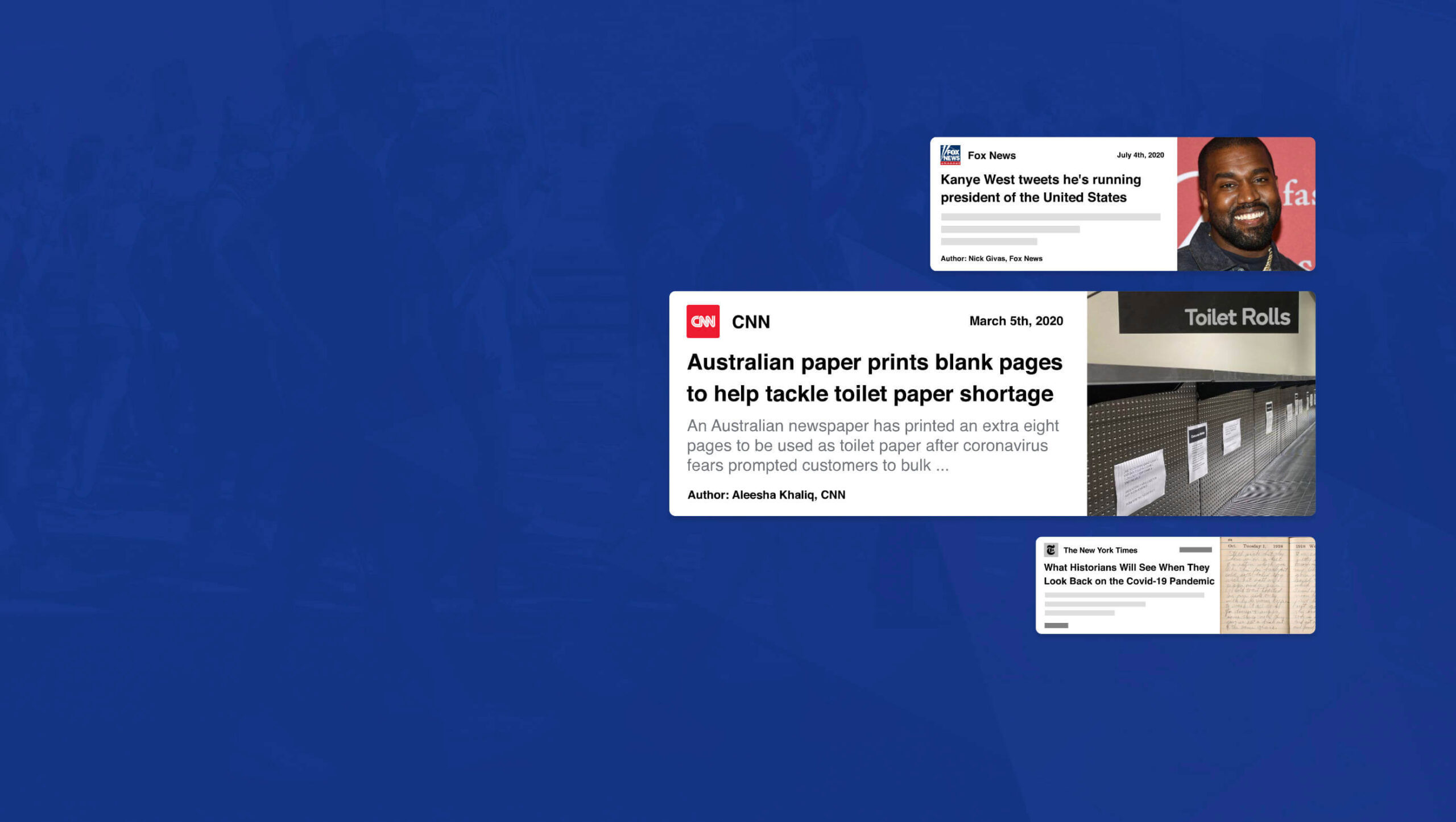

What an extraordinary year 2020 has been for the news! From the ongoing coronavirus crisis to a turbulent US election to the unrelenting march of Bitcoin, this year, like no other, has kept us glued to our phones, micro-analyzing every tidbit of news.

That makes this the perfect time for mediastack, an awesome REST API that lets you embed a customizable news feed, sourced from the world's top news agencies and updated by the minute, right on your site.

Integrating Global News with Your Site

News is the beating pulse of so many global industries. Think of political decisions that move stock prices, natural disasters that interrupt goods and services, or the whims of celebrities who can transform a brand from unknown to must-have overnight.

Whether you're building a site for a non-profit in Louisiana that cares deeply about both Washington politics and hurricanes in the Caribbean, or an app for a golf course in Halkidiki that focuses on both local news and golf around the world, delivering real-time news content to those users elevates the UX.

Tightly integrating the news with your site makes it a hub for users hungry for that very news. The only limit is your creativity.

Display Up-to-Date News on Your Site

When news breaks around the world, the top networks scramble to catch up; they simply can't maintain correspondents in every town and city, so they rely on affiliates. mediastack pulls in news from over 7,500 sources in more than 50 countries, giving you access to the same affiliates frequently used by big news organizations like CNN, MSNBC, BBC, and ABC.

When one of the big players in news breaks a story first, mediastack still has you covered: as well as smaller, lesser-known sources, it delivers real-time news from CBS, Sky News, The Guardian, Al Jazeera, USA Today, and a host of other trusted names across the industry.

If your site targets users who are only interested in certain types of story, like sports or Hollywood celebrities, you can even pull in stories from ESPN, TMZ, or Fox News.

Get Started Quickly with mediastack

Getting started with mediastack couldn’t be simpler, and there’s a free plan that’s more than enough to prototype your project.

Full documentation is provided with code examples for PHP, Python, jQuery, Go, and Ruby. To start integrating, all you need to do is register for a free access key.

Once you have your free access key, you connect to the API and customize the results with simple parameters. You can specify the news categories, the precise sources (including sources to exclude), languages, countries, and, most importantly, your keywords.

For example, here's how you'd request science news from CNN but not TMZ:

https://api.mediastack.com/v1/news?access_key=YOUR_ACCESS_KEY&categories=science&sources=cnn,-tmz

Let's say you want to display Spanish-language crypto news on your site. It couldn't be easier:

https://api.mediastack.com/v1/news?access_key=YOUR_ACCESS_KEY&categories=business,technology&languages=es&search=crypto,bitcoin,btc,xrp,ripple,ethereum,altcoin
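If you'd rather assemble these request URLs in code than by hand, here is a minimal Python sketch. `build_news_url` is a hypothetical helper, not part of mediastack's own example code. It simply URL-encodes the parameter names used in the two examples (`access_key`, `categories`, `sources`, `languages`, `search`) and joins list values with commas, so a leading `-` still marks a source to exclude.

```python
from urllib.parse import urlencode

# Endpoint as given in the examples. Note: the pricing section lists HTTPS
# as a commercial-plan feature, so a free key may need an http:// base URL.
BASE_URL = "https://api.mediastack.com/v1/news"


def build_news_url(access_key: str, base_url: str = BASE_URL, **params) -> str:
    """Build a request URL (hypothetical helper).

    List or tuple values are joined with commas, matching the
    comma-separated style of the examples (e.g. sources=cnn,-tmz).
    """
    query = {"access_key": access_key}
    for name, value in params.items():
        if isinstance(value, (list, tuple)):
            value = ",".join(value)
        query[name] = value
    # safe="," keeps commas readable instead of encoding them as %2C
    return f"{base_url}?{urlencode(query, safe=',')}"


if __name__ == "__main__":
    print(build_news_url("YOUR_ACCESS_KEY", categories="science", sources=["cnn", "-tmz"]))
    # → https://api.mediastack.com/v1/news?access_key=YOUR_ACCESS_KEY&categories=science&sources=cnn,-tmz
```

The Spanish-language crypto feed is the same call with `categories=["business", "technology"]`, `languages="es"`, and the keyword list passed as `search`.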

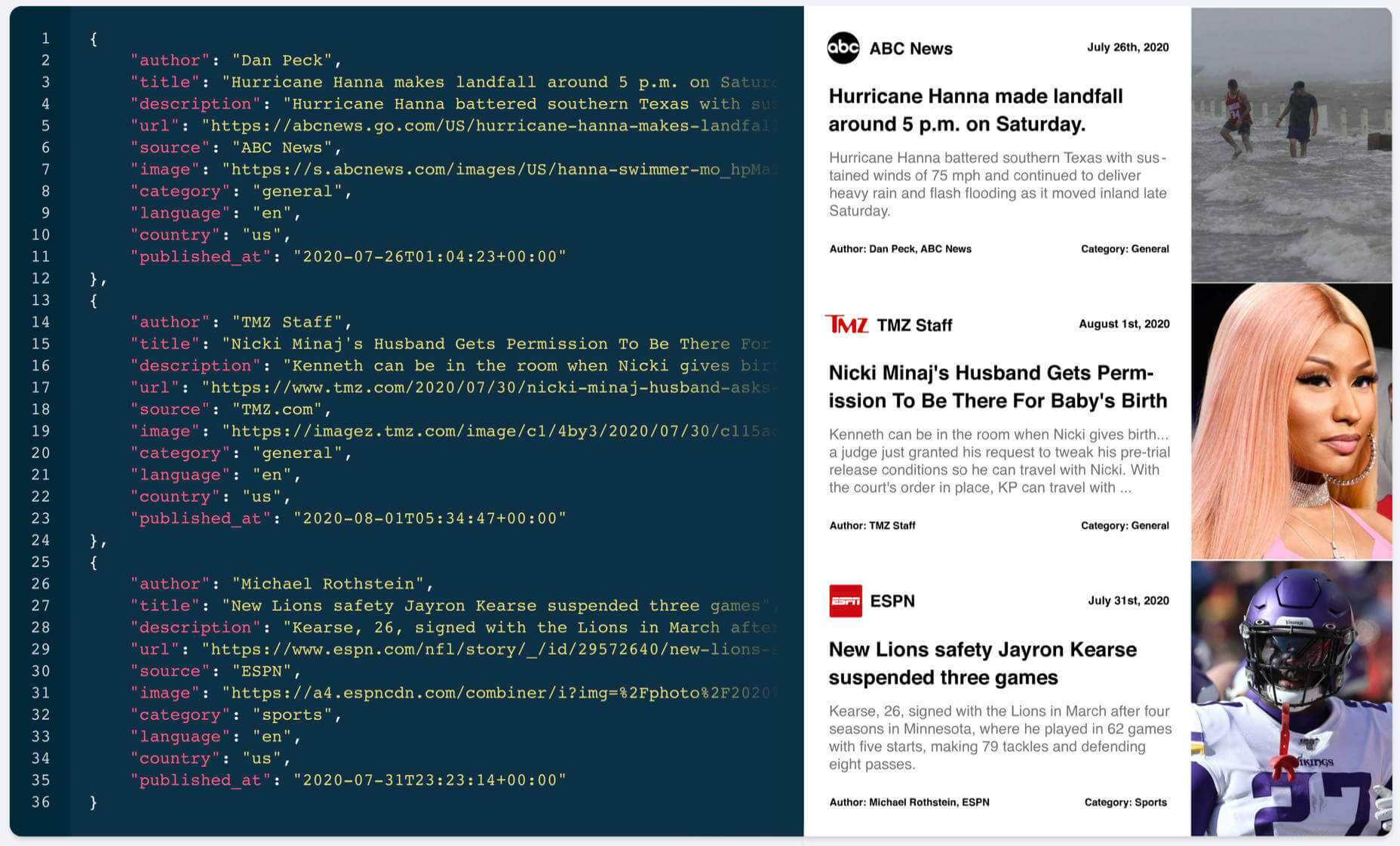

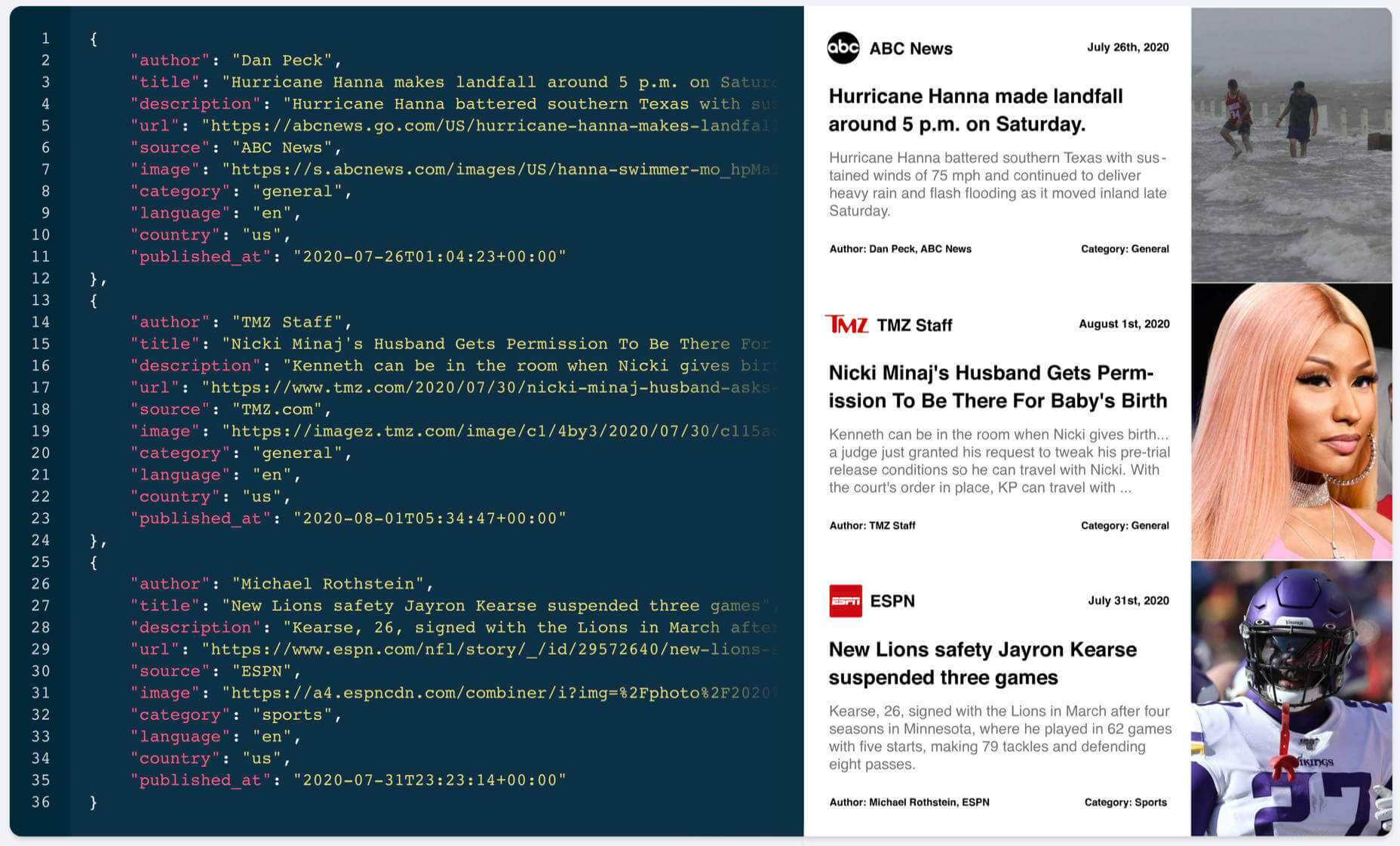

The API sends back simple JSON data that's easy to parse. Each news item includes the author, title, description, url, source, image, category, language, country, and a published_at timestamp recording when the story was posted.
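Turning that response into typed objects takes only the standard library. This sketch assumes the articles arrive in a top-level `data` array and that `published_at` is an ISO 8601 timestamp with a numeric UTC offset (for example `2020-12-01T10:00:00+00:00`); check both assumptions against the mediastack documentation. `NewsItem` and `parse_news` are illustrative names.

```python
import json
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional


@dataclass
class NewsItem:
    """One article, mirroring the fields listed above."""
    title: str
    url: str
    source: str
    published_at: datetime
    author: Optional[str] = None
    description: Optional[str] = None
    image: Optional[str] = None
    category: Optional[str] = None
    language: Optional[str] = None
    country: Optional[str] = None


def parse_news(payload: str) -> List[NewsItem]:
    """Convert a raw JSON response body into NewsItem objects.

    Assumption: articles live in a top-level "data" array, and
    published_at uses ISO 8601 with a numeric offset ("+00:00").
    """
    body = json.loads(payload)
    items = []
    for raw in body.get("data", []):
        items.append(
            NewsItem(
                title=raw["title"],
                url=raw["url"],
                source=raw["source"],
                # fromisoformat() before Python 3.11 rejects a trailing "Z",
                # so this relies on the "+00:00" form assumed above
                published_at=datetime.fromisoformat(raw["published_at"]),
                author=raw.get("author"),
                description=raw.get("description"),
                image=raw.get("image"),
                category=raw.get("category"),
                language=raw.get("language"),
                country=raw.get("country"),
            )
        )
    return items
```

Optional fields such as `author` and `image` default to `None`, since not every story is likely to carry them.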

Once the feed is set up, sit back and relax: it's all automated from then on.

The Best Source of News for Your Website

mediastack is delivered by apilayer, quite rightly one of the most trusted names in APIs, and is capable of handling millions of requests simultaneously.

Fast, updated by the minute, highly customizable, reliable, and sourced from the biggest names in the news industry, mediastack is an amazing API.

There's a free-forever plan that lets you use the API at no charge for up to 500 API calls per month, which is perfect for trying it out.

For commercial use, plans start at just $19.99/month and can handle up to 250,000 calls per month. Commercial plans also include HTTPS encryption, live news delivery, access to historical data, and, should you ever need it, technical support.
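Because every request counts against the monthly quota, it's worth working out how often an automated feed can refresh. Here is a rough sketch, assuming a single feed that makes one request per refresh and a worst-case 31-day month: 500 calls is only about 16 requests a day, while 250,000 calls allows roughly one request every 11 seconds. `min_poll_interval` is a hypothetical helper.

```python
import math

SECONDS_PER_DAY = 24 * 60 * 60


def min_poll_interval(calls_per_month: int, days_in_month: int = 31) -> int:
    """Smallest whole-second gap between requests that keeps one feed
    within a monthly call quota (defaults to a worst-case 31-day month)."""
    if calls_per_month <= 0:
        raise ValueError("calls_per_month must be positive")
    return math.ceil(days_in_month * SECONDS_PER_DAY / calls_per_month)


if __name__ == "__main__":
    for plan, quota in [("free", 500), ("commercial", 250_000)]:
        seconds = min_poll_interval(quota)
        print(f"{plan}: one request every {seconds} s (~{seconds / 60:.1f} min)")
```

If a site runs several feeds, say one per category, divide the quota between them before computing the interval.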

Head over to mediastack today, to prepare your site for whatever events 2021 throws at us.

[– This is a sponsored post on behalf of mediastack –]

Article source: Webdesignerdepot