I had the opportunity to meet with Marco Palladino, CTO and co-founder of Kong, on a recent trip to San Francisco to discuss the company's decision to open source its universal service mesh, Kuma.

Two major announcements over the last few days in the Java community! Today, the Eclipse Foundation announced both the Jakarta EE 8 release and Eclipse Che 7 release. And it’s all about the cloud!


Jakarta EE 8

Two years after Oracle handed Enterprise Java over to the Eclipse Foundation, where it became Jakarta EE, the foundation has released version 8. As its name suggests, this version is compatible with Java EE 8, but it is now completely open source and therefore royalty-free.

GitLab Pages is a way to create websites for projects and groups in order to publish documentation, wikis, or any static content. Sometimes we need to host GitLab Pages on a separate server: because of resource limitations, to reduce the load on the main GitLab instance (if self-hosted), to increase security, or to separate docs and wikis from code. To achieve this, we need two GitLab instances on two distinct machines: one is our main GitLab (a normal GitLab installation), and the other is an instance used only for publishing GitLab Pages.

This tutorial provides some technical information and configurations. We assume you are familiar with GitLab installation and GitLab Pages, and that you already have one self-managed GitLab instance (on-premises or in the cloud) in use.

Introduction

Some time ago, I came across a life-cycle management tool (or cloud service) called Valohai, and I was quite impressed by its user interface and the simplicity of its design and layout. I had a good chat about the service at the time with a member of the Valohai team and was given a demo. Before that, I had written a simple pipeline using GNU Parallel, JavaScript, Python, and Bash, and another one using only GNU Parallel and Bash.

I also thought about replacing the moving parts with ready-to-use task/workflow management tools like Jenkins X, Jenkins Pipeline, Concourse, or Airflow, but for various reasons, I did not proceed with the idea.

Released by Facebook in 2013, React has steadily become an industry-standard library for front-end development. Per Stack Overflow’s most recent Developer Survey, React sits at the top of the list as developers’ most "loved" and most "wanted" web library/framework of 2019. This popularity is largely owed to React’s component-based functionality, which allows developers to create dynamic, user-friendly interfaces with reusable elements for single-page applications (SPAs).

In this "Best of DZone" compilation, we’re going to break down React by providing articles that introduce the library, compare it to similar frameworks (Angular and Vue.js), and take a look at key concepts, such as components, props, virtual DOM, and state management. Then, we’ll present tutorials, beginning with simple, "Hello, World" applications, moving to more technically dense, niche topics, and finishing with a few long-term projects.

With the React VR framework, you are now able to build VR web apps. WebVR is an experimental API that enables the creation and viewing of VR experiences in your browser. The goal of this new technology by Oculus is to give everyone access to virtual reality, regardless of the device at hand.

All you need to make a React VR app is a headset and a compatible browser, and you do not even need a headset just to view a WebVR application. React VR is a great framework for building VR websites or apps in JavaScript. It uses the same design as React Native and lets you make virtual reality tours and user interfaces with the provided components.

Caching With Entity Framework

The Entity Framework is a set of technologies in ADO.NET that support the development of data-oriented software applications. With the Entity Framework, developers can work at a higher level of abstraction when they deal with data and can create and maintain data-oriented applications with less code than in traditional applications.

NCache introduces a caching provider that sits between Entity Framework and the data source. The main purpose of the EF caching provider is to reduce database trips (which slow down application performance) by serving query results from the cache. Because the provider sits between the ADO.NET Entity Framework and the original data source, it can be plugged in without changing or recompiling the current code.
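The idea of serving repeated query results from a cache instead of the database can be sketched as follows. This is a minimal, language-neutral illustration written in Java; it is not the NCache or Entity Framework API. The class `QueryCache`, its methods, and the use of the query text as the cache key are all assumptions made for the example.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Function;

// Hypothetical sketch: a cache that sits between the caller and the data source.
// The "database" is any function that turns a query string into a result.
final class QueryCache<R> {
    private final Map<String, R> cache = new ConcurrentHashMap<>();
    private final Function<String, R> database;
    private final AtomicInteger databaseTrips = new AtomicInteger();

    QueryCache(Function<String, R> database) {
        this.database = database;
    }

    // Returns the cached result if present; otherwise queries the database once
    // and stores the result under the query text.
    R execute(String query) {
        return cache.computeIfAbsent(query, q -> {
            databaseTrips.incrementAndGet();
            return database.apply(q);
        });
    }

    // Number of times the underlying data source was actually queried.
    int databaseTrips() {
        return databaseTrips.get();
    }
}
```

Calling `execute` twice with the same query text reaches the data source only once; the second call is answered from the cache. A real provider also has to deal with invalidation and expiry, which this sketch leaves out.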

As businesses increasingly rely on data to fuel their daily operations, the need for protecting this data is at an all-time high. Systems, processes, and physical assets all need to be secured as part of a company’s overall data security plan.

There are many ways through which business information can be protected against threats. Some techniques involve securing data from cybercriminals, while others involve offline processes such as locking file cabinets, maintaining access control to specific rooms, and setting up employee guidelines during daily operations.

The Challenge

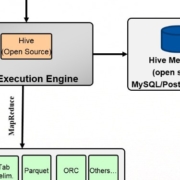

The idea of the traditional data center being centered on relational database technology is quickly evolving. Many new data sources exist today that did not exist as little as five years ago. Devices such as active machine sensors on machinery, autos, and aircraft, medical sensors, and RFIDs, as well as social media and web click-through activity, are creating tremendous volumes of mostly unstructured data, which cannot possibly be stored or analyzed in traditional RDBMSs.

These new data sources are pushing companies to explore the concepts of Big Data and Hadoop architecture, which is creating a new set of problems for corporate IT. Hadoop development and administration can be complicated and time-consuming. Developing the complex MapReduce programs needed to mine this data is a very specialized skill. Companies need to invest in training their existing personnel or hire people specializing in MapReduce programming and administration. This is the very reason many enterprises have been hesitant to invest in big data applications.

With the MicroProfile Config API, there is a new and easy way to deal with configuration properties in an application. The MicroProfile Config API allows you to access config and property values from different sources, like:

- System.getProperties() (ordinal=400)

- System.getenv() (ordinal=300)

- all META-INF/microprofile-config.properties files

Developers can find a good introduction to the MicroProfile Config API here. Of course, developers can also implement their own config source. However, most of the examples are based on reading custom config values from an existing file, like in the example here.
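To make the role of the ordinals listed above concrete, here is a minimal sketch of ordinal-based lookup in plain Java. It does not use the MicroProfile Config library; the classes `SimpleSource` and `OrdinalLookup` are hypothetical stand-ins that only mimic the rule that the source with the highest ordinal wins when several sources define the same key.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.Optional;

// Hypothetical stand-in for a config source: a name, an ordinal, and its properties.
final class SimpleSource {
    final String name;
    final int ordinal;
    final Map<String, String> properties;

    SimpleSource(String name, int ordinal, Map<String, String> properties) {
        this.name = name;
        this.ordinal = ordinal;
        this.properties = properties;
    }
}

// Hypothetical lookup that consults sources from highest to lowest ordinal.
final class OrdinalLookup {
    private final List<SimpleSource> sources;

    OrdinalLookup(List<SimpleSource> sources) {
        List<SimpleSource> sorted = new ArrayList<>(sources);
        // Highest ordinal first, so it takes precedence on conflicts.
        sorted.sort(Comparator.comparingInt((SimpleSource s) -> s.ordinal).reversed());
        this.sources = sorted;
    }

    // Returns the value from the highest-ordinal source that defines the key.
    Optional<String> getValue(String key) {
        for (SimpleSource source : sources) {
            String value = source.properties.get(key);
            if (value != null) {
                return Optional.of(value);
            }
        }
        return Optional.empty();
    }
}
```

With a source of ordinal 400 and another of ordinal 300 both defining the same key, `getValue` returns the value from the ordinal-400 source. A custom config source would slot into the same ordering according to the ordinal it declares.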