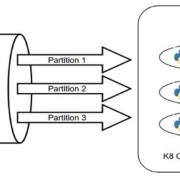

Two of the most popular messaging options today are Kafka and brokers built around JMS. JMS (Java Message Service) is a long-standing Java API for developing messaging applications; its primary function is sending messages between two or more clients. Kafka, on the other hand, is a distributed streaming platform that scales well and is useful for real-time data processing.

Both have their own advantages and are highly useful in their own right, so which of the two should you actually be using?