Since the skeuomorphism of the early 00s, the design trend of choice has been minimalism. In fact, minimalism has been de rigueur for substantially longer than that.

You can make a fair case that minimalism was the defining theme of the 20th century. It began with the widespread adoption of grotesque typefaces in mass advertising the century before, continued through the less-is-more philosophies of the mid-century, and culminated in the luxury consumerism of the 80s and 90s.

Minimalism has been central to the practice of almost every designer we recognize as such. It underpins the very definition of the discipline.

With such an illustrious history behind it, it's no wonder that the fledgling web (on the scale of history, the web is still a very new phenomenon) adopted minimalism.

And then there's the fact that a minimalist approach works on the web. Multiple viewports, multiple connection speeds, multiple user journeys: all of these are far easier to handle when you reduce the number of visual components that have to adapt to each context.

And yet, despite this, an increasing number of designers are abandoning minimalism in favor of a more flamboyant approach where form is function. It's clearly happening. What's not clear is whether this is a short-lived stylistic fad or something more fundamental. In other words, are designers about to abandon grids, or are they just slapping some gradients on an otherwise minimal design?

Featured image via Pexels.

The post Poll: Is Minimalism a Dead Trend Walking? first appeared on Webdesigner Depot.

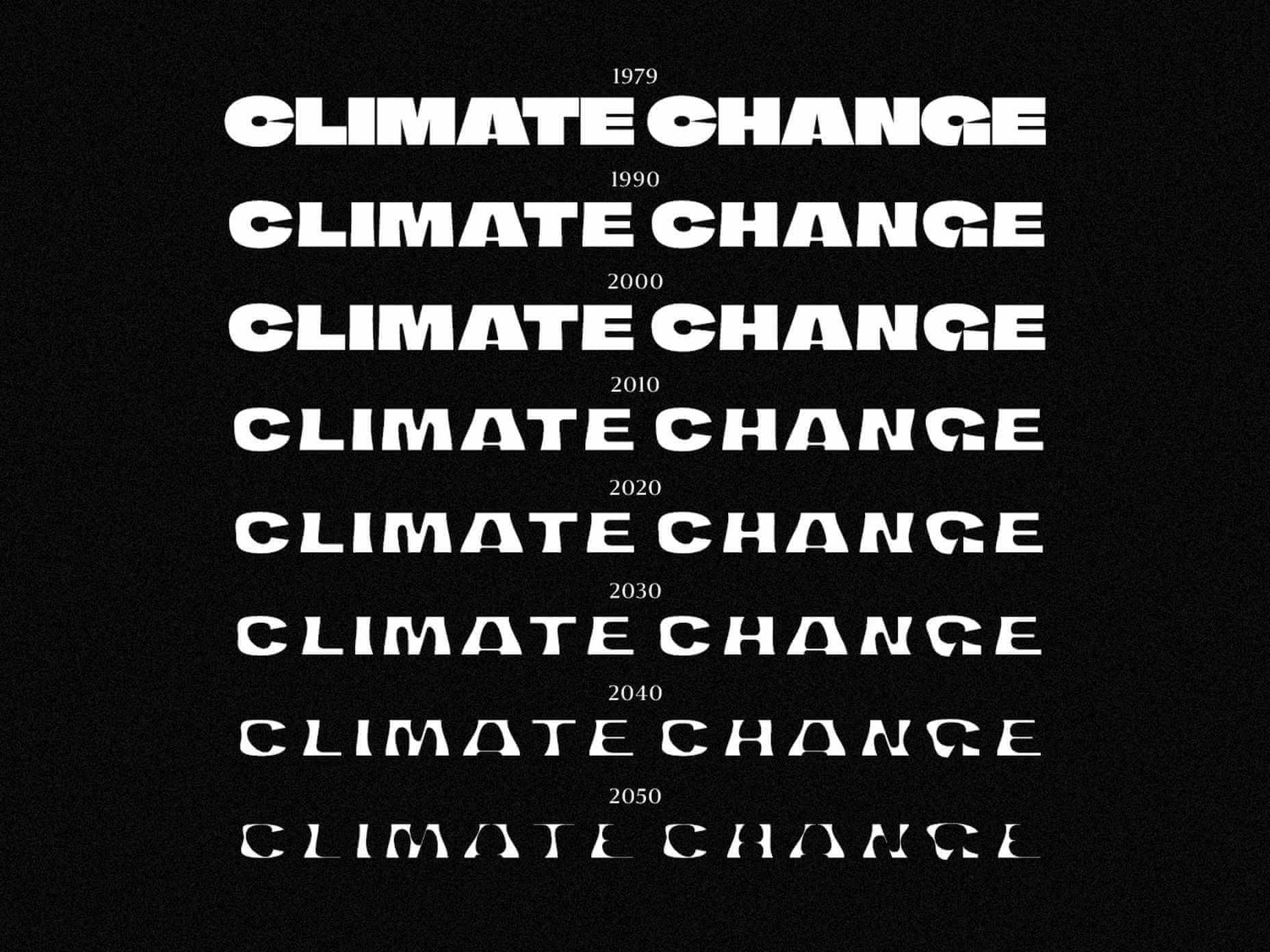

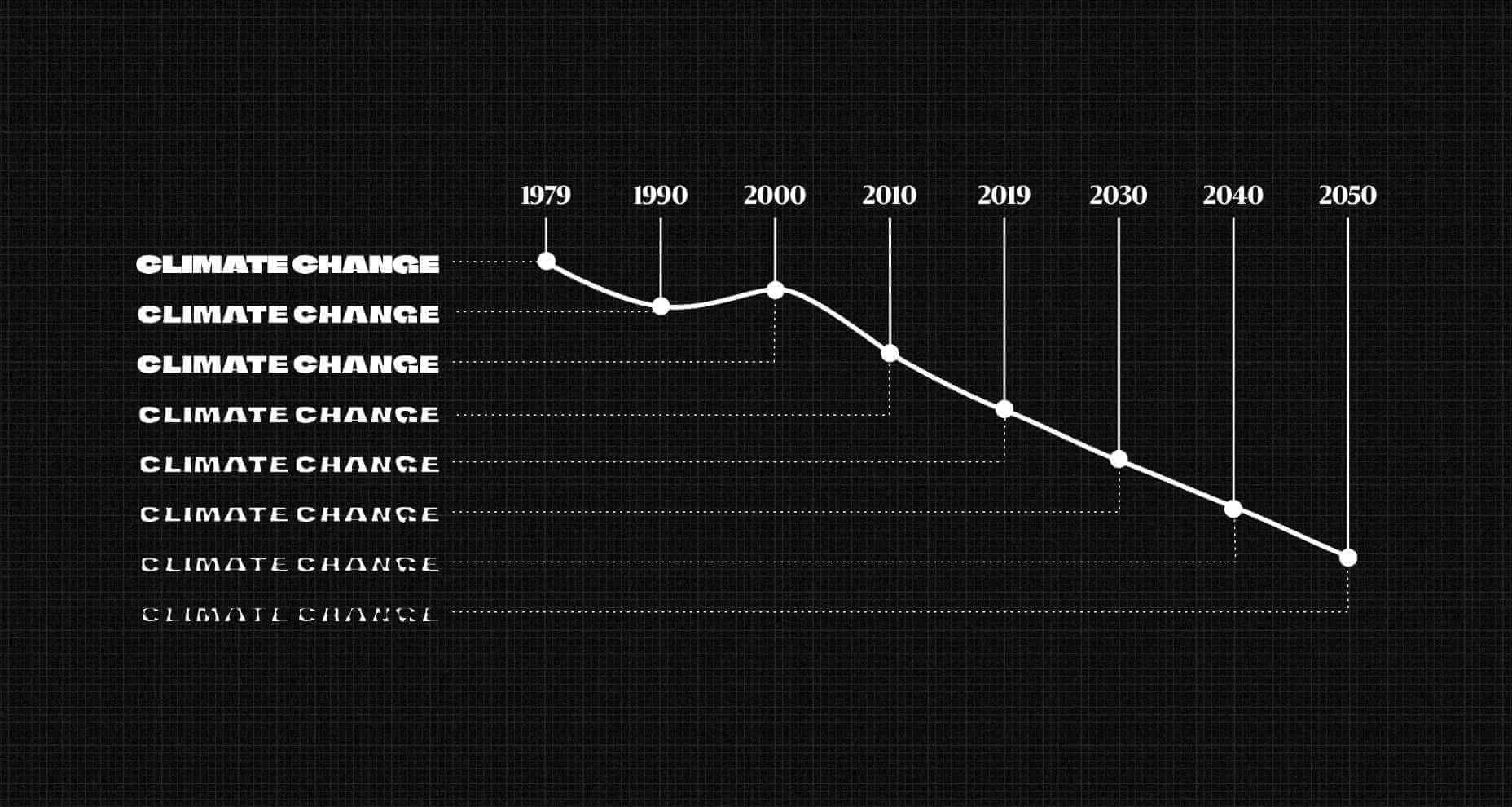

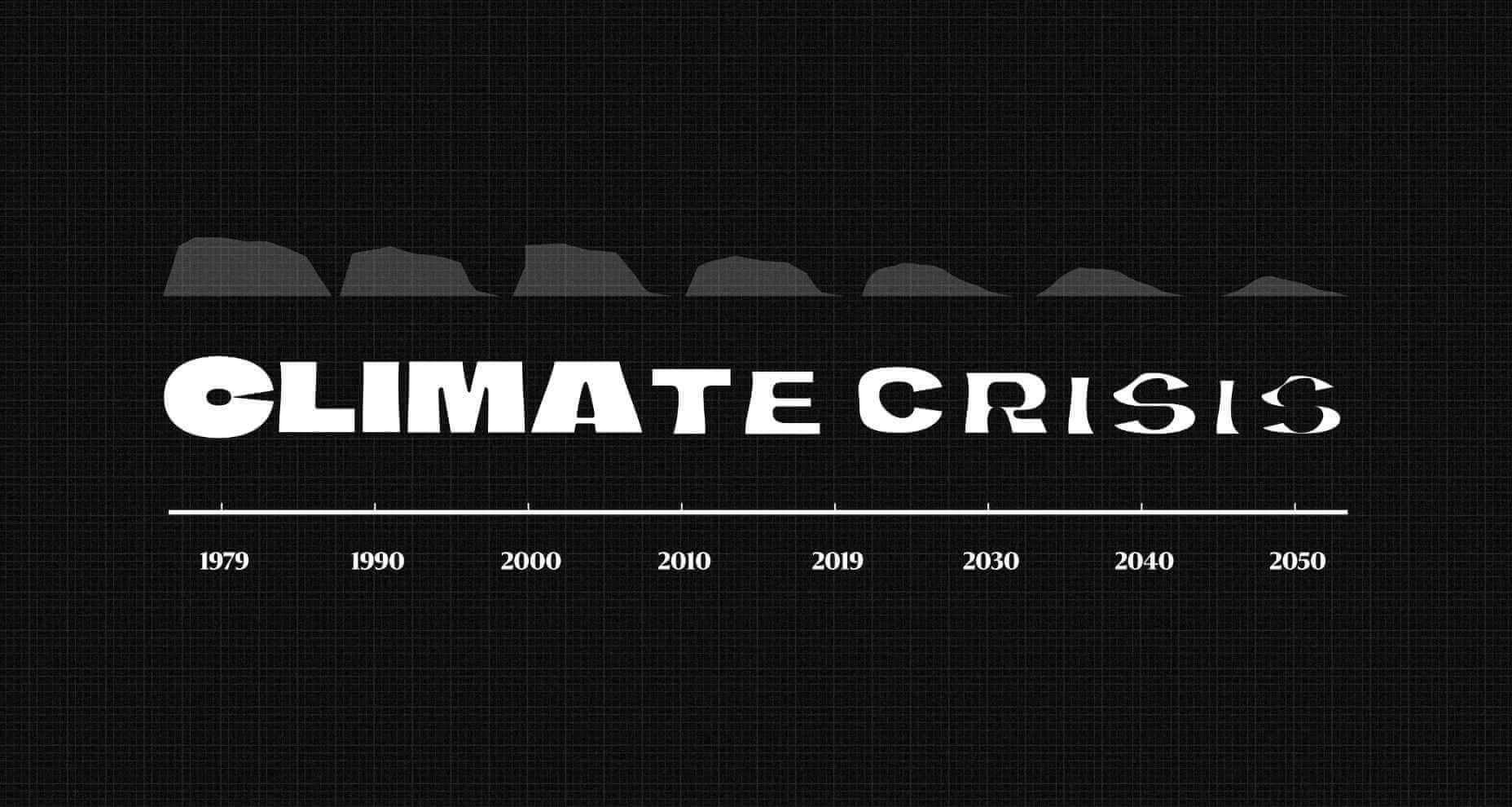

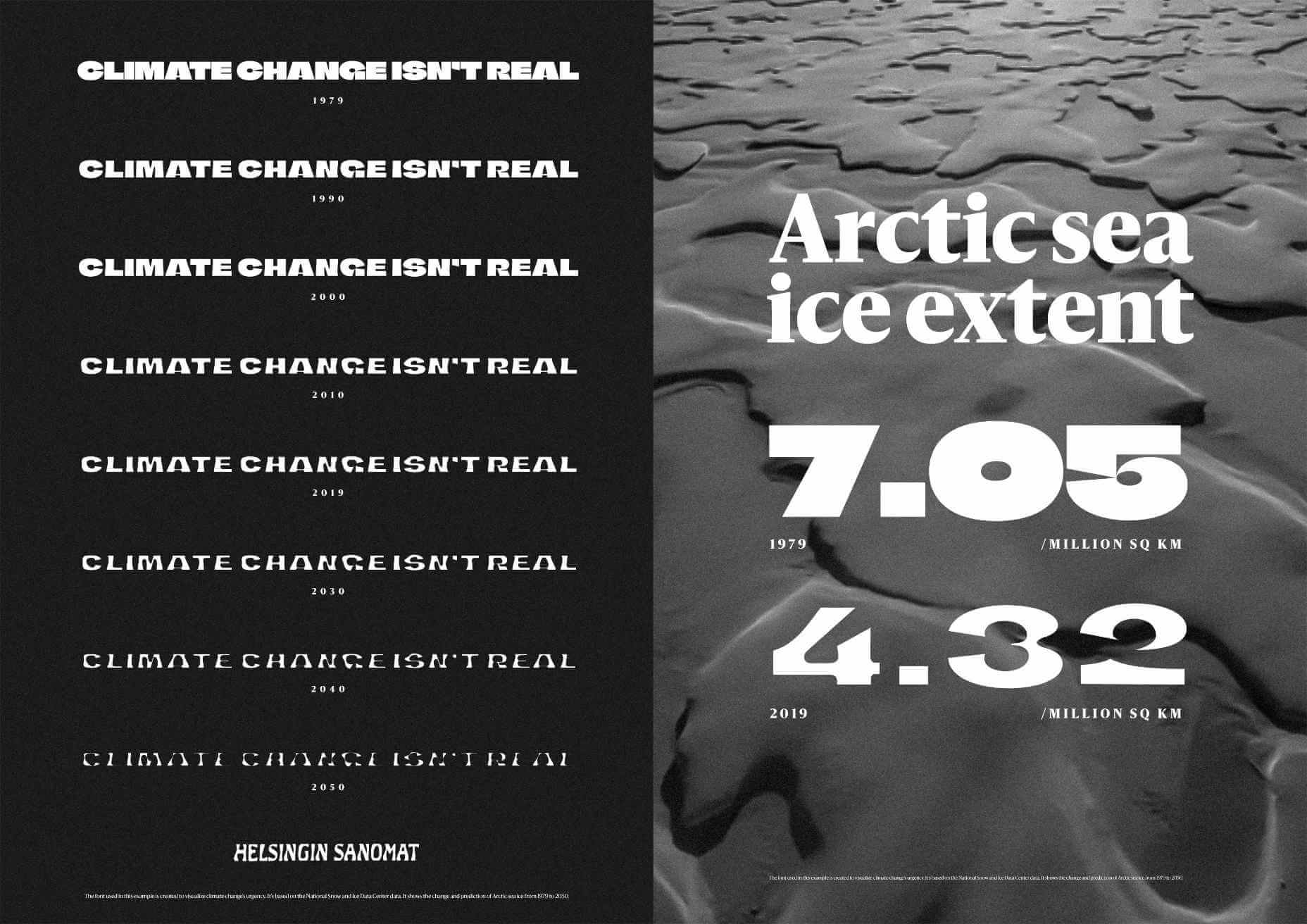

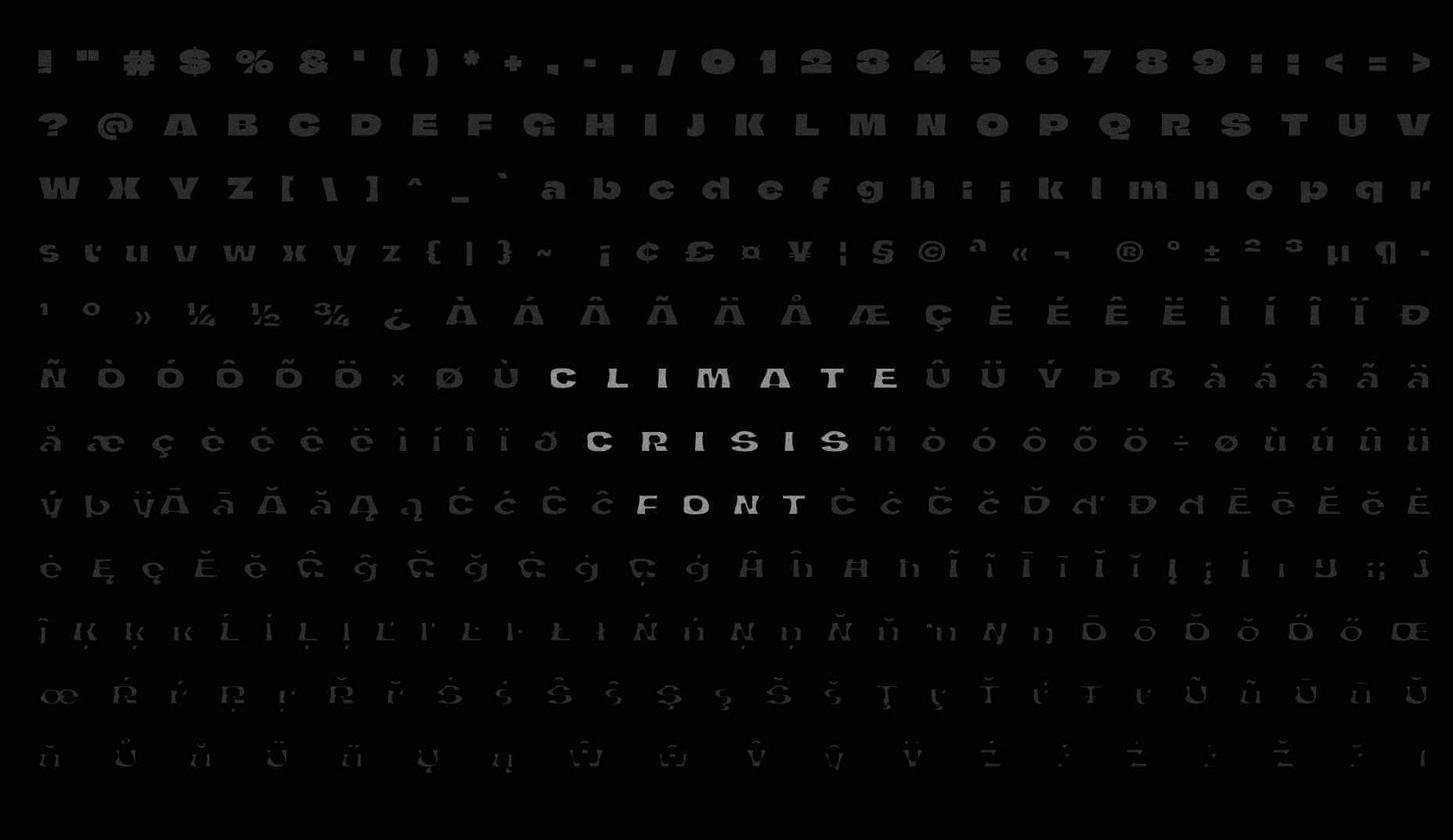

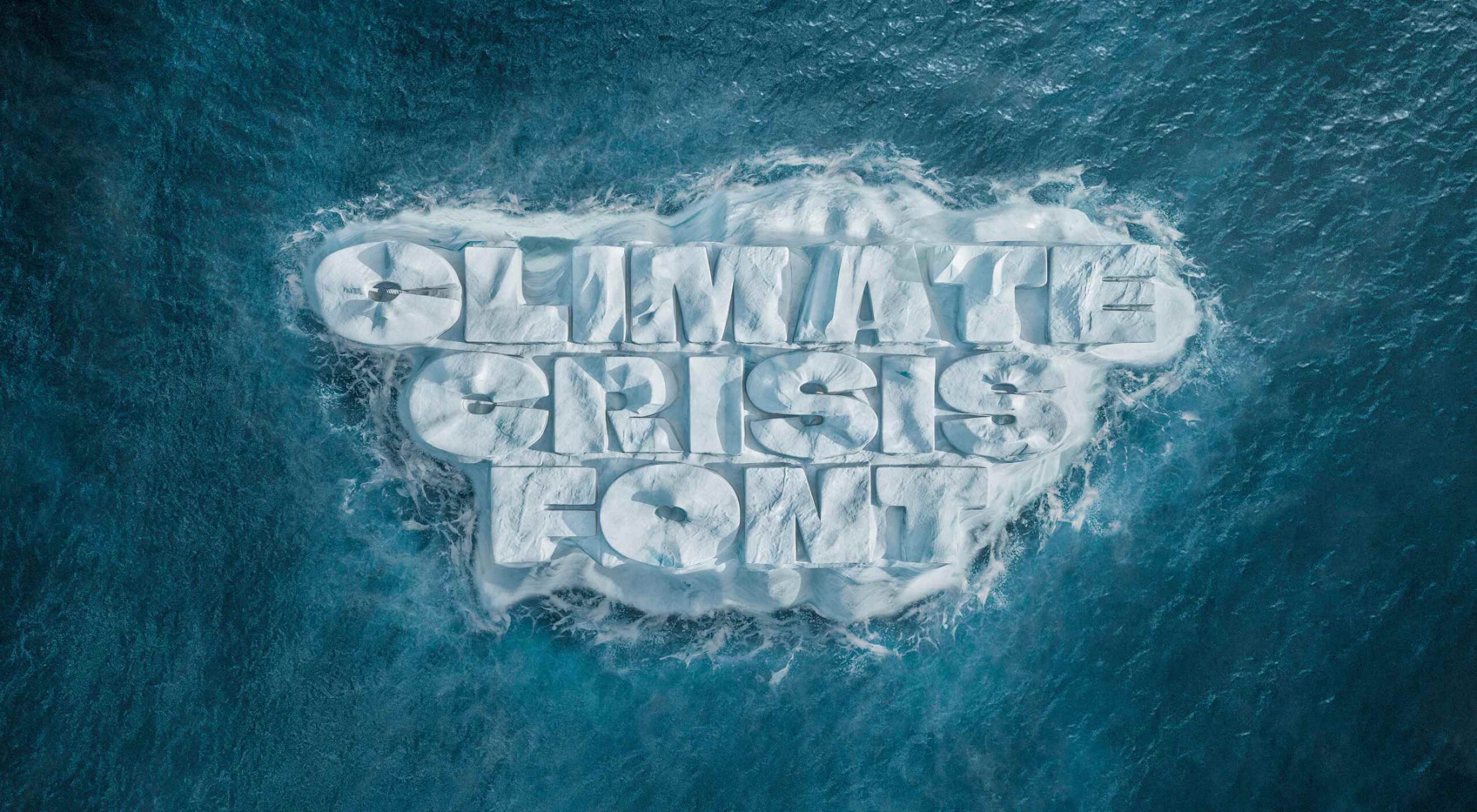

Finnish newspaper Helsingin Sanomat has developed