Enterprises of all sizes are embracing the rapid modernization of user-facing applications as part of their broader digital transformation strategy. The relational database (RDBMS) infrastructure that such applications rely on suddenly needs to support much larger data sizes and transaction volumes. However, a monolithic RDBMS tends to quickly get overloaded in such scenarios. One of the most common architectures to get more performance and scalability in an RDBMS is to “shard” the data. In this blog, we will learn what sharding is and how it can be used to scale a database. We will also review the pros and cons of common sharding architectures, plus explore how sharding is implemented in distributed SQL-based RDBMS like YugaByte DB.

What Is Data Sharding?

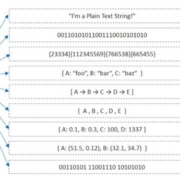

Sharding is the process of breaking up large tables into smaller chunks called shards that are spread across multiple servers. A shard is essentially a horizontal data partition that contains a subset of the total data set, and hence is responsible for serving a portion of the overall workload. The idea is to distribute data that can’t fit on a single node onto a cluster of database nodes. Sharding is also referred to as horizontal partitioning. The distinction between horizontal and vertical comes from the traditional tabular view of a database. A database can be split vertically — storing different table columns in a separate database, or horizontally — storing rows of the same table in multiple database nodes.